“Real Attackers Don’t Compute Gradients”: Bridging the Gap Between Adversarial ML Research and Practice

Schloss Dagstuhl on Security of Machine Learning

At the Dagstuhl Seminar on Security of Machine Learning in July 2022, experts from all over the world met to discuss research trends and future directions for research in protecting ML-based systems. The seminar featured a mix of academics, young researchers, and industry practitioners. Despite the relaxed atmosphere, the seminar raised diverse questions, and a recurring theme was the practical relevance of adversarial ML research. For example, should industry truly be worried about the attacks portrayed in research papers, and are the assumptions made in research truly representative of the real world?

After the seminar, Dr. Fabio Pierazzi (KCL) discussed with Dr. Giovanni Apruzzese (UniLi) the idea of submitting a position paper to the newly established IEEE Conference on Secure and Trustworthy Machine Learning (SaTML), created by Dr. Nicolas Papernot (UofT) and Prof. Patrick McDaniel (Pennsylvania State University). “We really wanted to make some statements and recommendations after the preliminary discussions at Dagstuhl, so we decided to contact some other participants from industry to identify key points to share with the community, and they were excited as well”, Dr. Pierazzi explains. “It was originally planned to be a 5-page position paper, but it turned out to be a 26-page paper dense with insights and recommendations. I also truly loved that it was a truly collaborative effort among all authors, strongly driven by Giovanni.”

What the Paper is About

Motivated by the apparent gap between researchers and practitioners, the position paper [1] aims to bridge the two domains. The authors first present three real-world case studies from which we can glean practical insights unknown or neglected in research. Next, they analyze all adversarial ML papers recently published in top security conferences, highlighting positive trends and blind spots. Finally, they state positions on precise and cost-driven threat modeling, collaboration between industry and academia, and reproducible research.

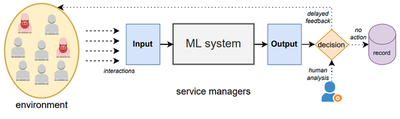

One of the paper's major statements is that an ML system should be considered as a whole, that is, as a larger and more complex system rather than as an isolated ML model.

As a case study, the authors present, for the first time, the architecture of Facebook's ML system for spam detection. Its complexity goes well beyond an individual ML model: real ML systems include many components, not all of which use ML, and attackers must bypass every one of them to succeed.
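
The point that an attacker must bypass every component can be illustrated with a minimal sketch. This is not Facebook's actual architecture: the component names, thresholds, and fields below are hypothetical and chosen only to show how a layered system behaves when one of its parts is fooled.

```python
from dataclasses import dataclass
from typing import Callable, List


@dataclass
class Component:
    """One stage of a detection system (hypothetical; may or may not use ML)."""
    name: str
    flags: Callable[[dict], bool]  # returns True when the stage flags the item


def is_blocked(item: dict, components: List[Component]) -> bool:
    # An item is blocked as soon as any single component flags it,
    # so evasion requires getting past every component.
    return any(c.flags(item) for c in components)


# Hypothetical pipeline: two non-ML checks and one ML classifier.
pipeline = [
    Component("rate_limiter", lambda it: it["posts_per_minute"] > 30),
    Component("url_blocklist", lambda it: it["url"] in {"http://bad.example"}),
    Component("ml_classifier", lambda it: it["ml_score"] >= 0.5),
]

# This item fools the ML classifier (low score) but still trips the rate limiter.
evades_model_only = {"posts_per_minute": 100, "url": "http://new.example", "ml_score": 0.1}

# This item gets past all three components.
evades_everything = {"posts_per_minute": 2, "url": "http://new.example", "ml_score": 0.1}

print(is_blocked(evades_model_only, pipeline))   # blocked by the rate limiter
print(is_blocked(evades_everything, pipeline))   # not blocked
```

In this sketch, an adversarial example that defeats only the `ml_classifier` stage is still stopped, which is why evaluating the model in isolation can overstate the attacker's success.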

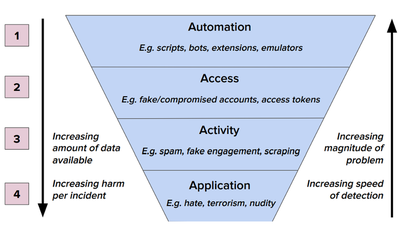

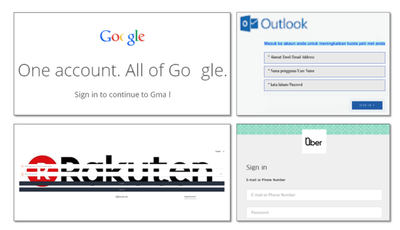

Another case study analyzes real-world datasets of phishing websites. The analysis reveals that, to evade ML-based phishing detectors, attackers rely on cheap but effective tactics that are unlikely to result from gradient computations.

The third case study, based on an ML competition, shows that measuring attack efficiency by the attacker's “query count” alone does not capture the time and domain expertise required to devise a successful attack. Indeed, in this competition, the attackers who spent more time were the ones who issued the fewest queries.

Finally, the paper states and substantiates several major positions, including:

- Threat models must precisely define the viewpoint of the attacker on every component of the ML system.

- Threat models must include cost-driven assessments for both the attacker and the defender.

- Practitioners and academics must actively collaborate to pursue their common goal of improving the security of ML systems.

- In ML security, source-code disclosure must be promoted with a just culture.
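
To make the idea of a cost-driven assessment more concrete, here is a minimal sketch. It is not the paper's formalization: the class, the function names, and all numbers are hypothetical, and costs are expressed in arbitrary units that could stand for time, expertise, or money.

```python
from dataclasses import dataclass


@dataclass
class AttackEstimate:
    """Hypothetical attacker-side estimate for one attack strategy."""
    name: str
    cost: float          # what the attacker must invest (arbitrary units)
    success_prob: float  # estimated probability that the attack succeeds
    gain: float          # attacker's payoff if the attack succeeds

    def expected_profit(self) -> float:
        # Expected payoff minus the up-front cost of mounting the attack.
        return self.success_prob * self.gain - self.cost

    def is_rational(self) -> bool:
        # Assumption: an economically motivated attacker only acts on positive profit.
        return self.expected_profit() > 0


def defense_is_justified(attack: AttackEstimate, defense_cost: float,
                         loss_if_successful: float) -> bool:
    # Defender-side view: deploy a countermeasure only if the attack is
    # plausible and the expected loss exceeds the cost of the defense.
    expected_loss = attack.success_prob * loss_if_successful
    return attack.is_rational() and expected_loss > defense_cost


# Placeholder numbers for two hypothetical strategies against the same system.
cheap_trick = AttackEstimate("cheap_trick", cost=10.0, success_prob=0.5, gain=100.0)
costly_attack = AttackEstimate("costly_attack", cost=200.0, success_prob=0.5, gain=100.0)

print(cheap_trick.expected_profit())    # 40.0
print(costly_attack.expected_profit())  # -150.0
```

Under these placeholder numbers, only the cheap strategy is worth the attacker's effort, so it is the one a defender would prioritize.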

Spotlights

Since its acceptance was announced on Twitter, the paper has received considerable attention: it was praised by Dr. Konstantin Berlin (Head of Sophos AI), summarized in a slide deck by Mr. Siva Kumar (Azure Data Science Team, Microsoft), and covered in a summary post by Moshe Kravchik. The paper will be presented on Feb 8, 2023 at IEEE SaTML by first author Dr. Giovanni Apruzzese.

References

[1] Giovanni Apruzzese, Hyrum Anderson, Savino Dambra, David Freeman, Fabio Pierazzi, Kevin Alejandro Roundy. Position: “Real Attackers Don’t Compute Gradients”: Bridging the Gap Between Adversarial ML Research and Practice. IEEE Conference on Secure and Trustworthy Machine Learning (SaTML), 2023 - Paper website